NIST Releases New Framework for Organizations Associated with AI Technologies

On January 26, 2023, the National Institute of Standards and Technology (NIST) released the Artificial Intelligence Risk Management Framework (AI RMF 1.0). According to NIST, the framework was developed in collaboration with the private and public sectors and is meant to be a voluntary resource for organizations designing, developing, deploying, or using AI systems.

AI is a rapidly developing technology with the potential to significantly change how we live. From healthcare to transportation to cybersecurity, AI can improve efficiency and drive economic growth. However, no matter how careful we are, AI comes with risks, including privacy violations, data breaches, and the amplification of biases and inequalities that already exist in our society.

NIST created AI RMF 1.0 to enhance the trustworthiness of AI systems. It provides a flexible approach that organizations of all sizes and sectors can adapt to measure and manage their AI risks, covering policies, practices, implementation plans, indicators, measurements, and expected outcomes. For example, AI RMF 1.0 can aid healthcare organizations in delivering valid and reliable results to patients while ensuring the privacy and protection of their personal information.

At the AI RMF 1.0 launch, Don Graves, Deputy Secretary of the US Department of Commerce, said, “This voluntary framework will help to develop and deploy AI in ways that enable organizations, the US, and other nations to enhance AI trustworthiness while managing risks based on our democratic values. It should help to accelerate AI innovation and growth while advancing—rather than restricting or damaging—civil rights, civil liberties, and equity.”

How It Works

AI RMF 1.0 was developed with more than 240 organizations through a collaborative process that included a Request for Information, several draft versions released for public comment, multiple workshops, and other opportunities to provide input.

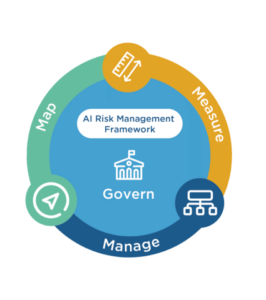

The framework is composed of four functions, 19 categories, and 72 subcategories. These functions can be used in any order; however, the GOVERN function is most commonly used first, followed by MAP, MEASURE, and MANAGE.

Let’s take a look at a breakdown of the four functions.

- GOVERN: A cross-cutting function that is infused throughout AI risk management and enables the other functions of the process. Aspects of GOVERN should be integrated into each of the other functions as a continual requirement for effective AI risk management.

- MAP: Establishes the context to frame risks related to an AI system. The MAP function is intended to enhance an organization’s ability to identify risks and broader contributing factors and is used as the basis for the MEASURE and MANAGE functions.

- MEASURE: Employs quantitative, qualitative, or mixed-method tools, techniques, and methodologies to analyze, assess, benchmark, and monitor AI risk and related impacts. The MEASURE function uses knowledge identified in the MAP function and informs the MANAGE function.

- MANAGE: Entails allocating risk resources to mapped and measured risks on a regular basis and as defined by the GOVERN function. Once the MANAGE function has been completed, plans for prioritizing risk and for regular monitoring and improvement will be in place.
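For readers who think in code, the way the four functions feed one another can be sketched as a tiny risk register. This is only an illustration, not part of AI RMF 1.0: the framework does not prescribe a scoring method, and every name here (`GovernancePolicy`, `Risk`, `map_risk`, `measure_risk`, `manage_risks`), the 1 to 5 scales, the threshold of 12, and the sample risks are hypothetical assumptions.

```python
from dataclasses import dataclass


@dataclass
class GovernancePolicy:
    """GOVERN: organization-wide settings the other functions rely on."""
    treat_at_or_above: int = 12  # hypothetical score threshold, not from NIST


@dataclass
class Risk:
    name: str
    context: str         # MAP: where and how the AI system is used
    likelihood: int = 0  # MEASURE: hypothetical 1-5 scale
    impact: int = 0      # MEASURE: hypothetical 1-5 scale

    @property
    def score(self) -> int:
        # Simple likelihood x impact product; an assumption for illustration.
        return self.likelihood * self.impact


def map_risk(name: str, context: str) -> Risk:
    """MAP: record a risk together with the context that frames it."""
    return Risk(name=name, context=context)


def measure_risk(risk: Risk, likelihood: int, impact: int) -> Risk:
    """MEASURE: attach an assessment to a risk identified during MAP."""
    risk.likelihood = likelihood
    risk.impact = impact
    return risk


def manage_risks(risks: list[Risk], policy: GovernancePolicy) -> list[Risk]:
    """MANAGE: pick the risks that need resources, highest score first."""
    selected = [r for r in risks if r.score >= policy.treat_at_or_above]
    return sorted(selected, key=lambda r: r.score, reverse=True)


# Hypothetical example: a healthcare organization reviewing one AI system.
policy = GovernancePolicy()
context = "patient intake model"
risks = [
    measure_risk(map_risk("biased triage output", context), 4, 5),
    measure_risk(map_risk("training data leak", context), 2, 5),
    measure_risk(map_risk("model drift", context), 3, 4),
]

for risk in manage_risks(risks, policy):
    print(risk.name, risk.score)
```

In this sketch the governance policy is passed into the final step, which mirrors the idea that GOVERN informs the other functions, while MAP output is the input to MEASURE and MEASURE output is the input to MANAGE. A real program would rely on the categories and subcategories in the framework itself rather than a single numeric score.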

Getting Started

NIST has provided multiple ways to engage with the new framework and a few educational resources on how to get started. Those resources include:

- A NIST AI RMF Playbook;

- The AI RMF Roadmap and AI RMF Crosswalk;

- Perspectives from various organizations and individuals; and

- An AI RMF video explainer.

NIST intends to evolve the framework as AI advances and will update the playbook twice per year. Organizations are encouraged to provide feedback on how the framework is working and to tailor its features to their specific environments.

Dr. Alondra Nelson, Deputy Assistant to the President, said, “The AI Risk Management Framework honors that legacy and advances that critical work for this era of American innovation. The framework gives us practical guidance on how to map, measure, manage, and govern AI risks. It guides us on the characteristics that can make AI safe and secure, fair and accountable, and protective of our privacy.”

At BARR, we look forward to the continued development of this new framework and will continue to advocate for improved risk management as it relates to AI and other online technologies.

Interested in more information on how BARR can help your organization manage security risks? Contact us today.